Best AI Tools for Kubernetes in 2025: Optimize, Troubleshoot & Scale with AI

Managing Kubernetes, especially in enterprise environments, has grown beyond what most teams can handle manually. Endless CRDs, countless YAML files, stacks of Helm charts, and hundreds of clusters push even the most experienced engineers to their cognitive limit.

To avoid burnout, reduce operational risk, and keep your systems reliable without watching them 24/7, you need AI tools that take over the repeatable tasks you handle every day.

Tools powered by ChatGPT, Claude, Claude Code, and AI agents in general can read logs, optimize costs, and manage parts of your workflows. They ease these problems while giving you what you need to keep your clusters up and running.

In this article, we will explore the top 5 AI tools that you can use in your Kubernetes ecosystem:

- K8sGPT

- CAST AI

- Lens Prism

- Kubeflow

- KServe

Why do you even need AI assistants in Kubernetes?

You might be asking yourself if you really need AI assistants in Kubernetes. The short answer is no: you can keep doing what you are doing today. In reality, though, Kubernetes was never designed to be simple. It is a powerful tool built to manage distributed systems, and that power comes with:

- Too much noise: Logs, events, and metrics pour out of your clusters from every direction. Finding the one line that matters in all that data is like searching for a needle in a field of haystacks.

- Too many moving parts: You are using Pods, Deployments, CRDs, Operators, ConfigMaps, Secrets, and each of them has its own way of failing.

- Responsibility that is spread too thin: Everybody relies on Kubernetes, from business executives to software developers to operations, which means that no one has the full picture of what is happening inside your clusters anymore.

With so much to take care of, your team gets overwhelmed, and you end up hiring more people just to keep your Kubernetes clusters running. An AI copilot helps with this. For now, think of it as a junior engineer who doesn’t sleep, doesn’t complain, and doesn’t get tired of going through logs. It is not meant to replace your engineers, but to equip them with what they need to be successful. Junior engineers benefit too: they can watch how a copilot reasons through different issues and, by working alongside senior engineers, learn where it gets things wrong and needs improvement.

Top AI tools

1. K8sGPT

K8sGPT is a CNCF project focused on troubleshooting. It is a command-line tool, available for the major operating systems, that scans your cluster for common problems and can also use LLMs to explain what’s wrong with your resources in plain English.

Running k8sgpt analyze shows you, at a glance, the top things you need to fix:

k8sgpt analyze

AI Provider: AI not used; --explain not set

0: Node lens-kind-control-plane()

- Error: lens-kind-control-plane has condition of type MemoryPressure, reason NodeStatusUnknown...

- Error: lens-kind-control-plane has condition of type DiskPressure, reason NodeStatusUnknown...

- Error: lens-kind-control-plane has condition of type PIDPressure, reason NodeStatusUnknown...

- Error: lens-kind-control-plane has condition of type Ready, reason NodeStatusUnknown...

1: Deployment default/nginx-deployment()

- Error: Deployment default/nginx-deployment has 3 replicas in spec but 4 replicas in status...

2: Deployment cert-manager/cert-manager-cainjector()

- Error: Deployment cert-manager/cert-manager-cainjector has 1 replicas but 0 are available with status running

3: Deployment default/argo-cd-1755793450-server()

- Error: Deployment default/argo-cd-1755793450-server has 1 replicas but 0 are available with status running

4: Deployment kube-system/coredns()

- Error: Deployment kube-system/coredns has 2 replicas but 0 are available with status running
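If you want to turn a report like the one above into numbers, a small script can tally the findings. The sketch below is only an illustration: it assumes the plain-text layout shown in this article (an indexed header line per resource, followed by "- Error:" lines), and the function names `parse_report` and `errors_by_kind` are made up for this example. If your K8sGPT version offers a structured output format, parsing that would be more robust than parsing text.

```python
import re
from collections import Counter
from typing import Dict, List, Optional

# Matches header lines such as "1: Deployment default/nginx-deployment()".
# Assumption: this mirrors the text layout shown in the article.
HEADER = re.compile(r"^(\d+): (\w+) ([^\s(]+)\(")


def parse_report(text: str) -> Dict[str, List[str]]:
    """Group error messages under a "Kind/name" key for each resource."""
    findings: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        match = HEADER.match(line)
        if match:
            current = f"{match.group(2)}/{match.group(3)}"
            findings[current] = []
        elif line.startswith("- Error:") and current is not None:
            findings[current].append(line[len("- Error:"):].strip())
    return findings


def errors_by_kind(findings: Dict[str, List[str]]) -> Dict[str, int]:
    """Count errors per resource kind (Node, Deployment, ...)."""
    counts: Counter = Counter()
    for resource, errors in findings.items():
        kind = resource.split("/", 1)[0]
        counts[kind] += len(errors)
    return dict(counts)
```

Feeding the saved report text into `parse_report` and then `errors_by_kind` gives you a quick per-kind summary that you can log or chart over time.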

Once you configure an AI backend with an API key, you can add the --explain flag (k8sgpt analyze --explain), which asks the LLM for a helpful diagnosis of what is happening with your Kubernetes resources.

For teams doing ops from the terminal, it provides immediate value by making single-cluster troubleshooting faster. You can use it, of course, for all of your clusters, but you will need to switch contexts, or build custom scripts.
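A custom script for the multi-cluster case can be quite small. The following is a minimal sketch, not an official K8sGPT feature: it shells out to `kubectl config get-contexts -o name`, `kubectl config use-context`, and `k8sgpt analyze`, and assumes both binaries are on your PATH and exit with status 0 on success. The helper names (`run_command`, `list_contexts`, `analyze_all`) are hypothetical.

```python
import subprocess
from typing import Callable, Dict, List

# A runner takes a command as a list of arguments and returns its stdout.
Runner = Callable[[List[str]], str]


def run_command(args: List[str]) -> str:
    """Run a command and return its standard output as text.

    Assumption: a non-zero exit status means failure, so it raises.
    """
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout


def list_contexts(runner: Runner = run_command) -> List[str]:
    """Return the context names defined in the active kubeconfig."""
    output = runner(["kubectl", "config", "get-contexts", "-o", "name"])
    return [line.strip() for line in output.splitlines() if line.strip()]


def analyze_all(contexts: List[str], runner: Runner = run_command) -> Dict[str, str]:
    """Switch to each context in turn and collect the k8sgpt report."""
    reports: Dict[str, str] = {}
    for context in contexts:
        # Note: this changes the current context in your kubeconfig.
        runner(["kubectl", "config", "use-context", context])
        reports[context] = runner(["k8sgpt", "analyze"])
    return reports
```

Calling `analyze_all(list_contexts())` returns one report per context. Because the script leaves your kubeconfig pointing at the last context it visited, you may want to record the original context and switch back afterwards. The `runner` parameter exists so you can swap in a fake for dry runs or tests.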

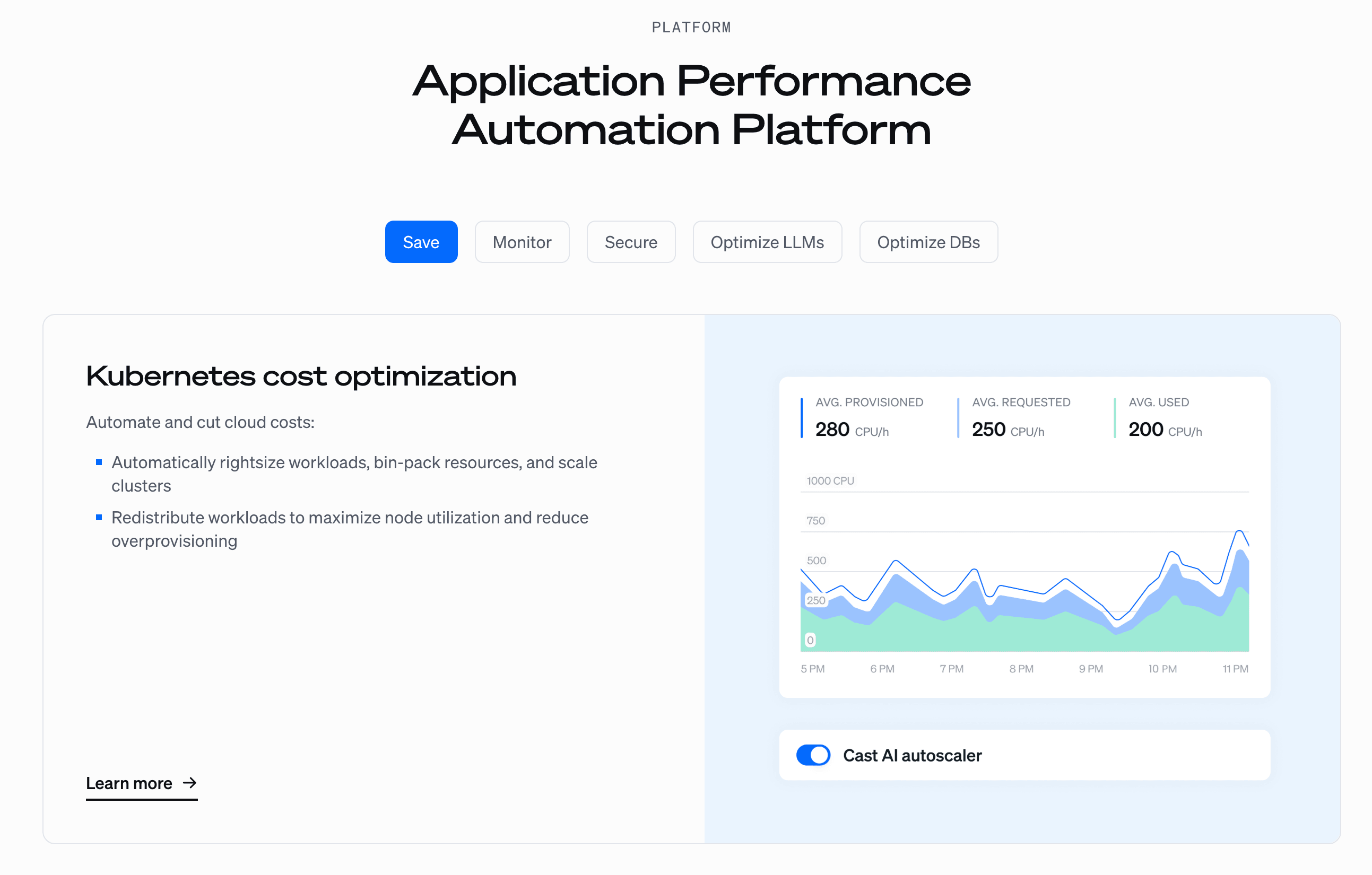

2. CAST AI

CAST AI automates Kubernetes cost optimization, so you don’t have to walk through all your Kubernetes resources to find the ones that are causing waste. It analyzes your workloads in real time and continuously adjusts your infrastructure to keep it as cheap and efficient as possible.

With CAST AI, you get automatic rightsizing of your pods and nodes, cheaper spot instances in place of expensive on-demand ones, and workload optimization. Instead of only recommending how to adjust these parameters, CAST AI applies the changes for you.
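To make the idea of rightsizing concrete, here is a deliberately simple sketch of the arithmetic behind it. This is not CAST AI's algorithm, which is not described in this article. It is a generic, hypothetical heuristic: take a high percentile of observed CPU usage, add some headroom, and round up to a clean step. The function names and the default values (95th percentile, 15% headroom, 10-millicore step) are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sample list (pct in 0-100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_cpu_request(usage_millicores: Sequence[float],
                          pct: float = 95.0,
                          headroom: float = 0.15,
                          step: int = 10) -> int:
    """Suggest a CPU request in millicores from observed usage samples.

    Hypothetical heuristic: percentile of usage plus headroom,
    rounded up to the next multiple of `step`.
    """
    target = percentile(usage_millicores, pct) * (1 + headroom)
    return int(math.ceil(target / step) * step)


def savings_percent(current_request: int, recommended: int) -> float:
    """Share of the current request that the recommendation would free up."""
    return round(100 * (current_request - recommended) / current_request, 1)
```

A pod that requests far more CPU than this kind of estimate suggests is a candidate for a smaller request. A production system would also have to weigh memory, traffic bursts, and scheduling constraints, which this sketch ignores.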

Some customer testimonials report cloud spend reductions of up to 60% without any loss of performance.

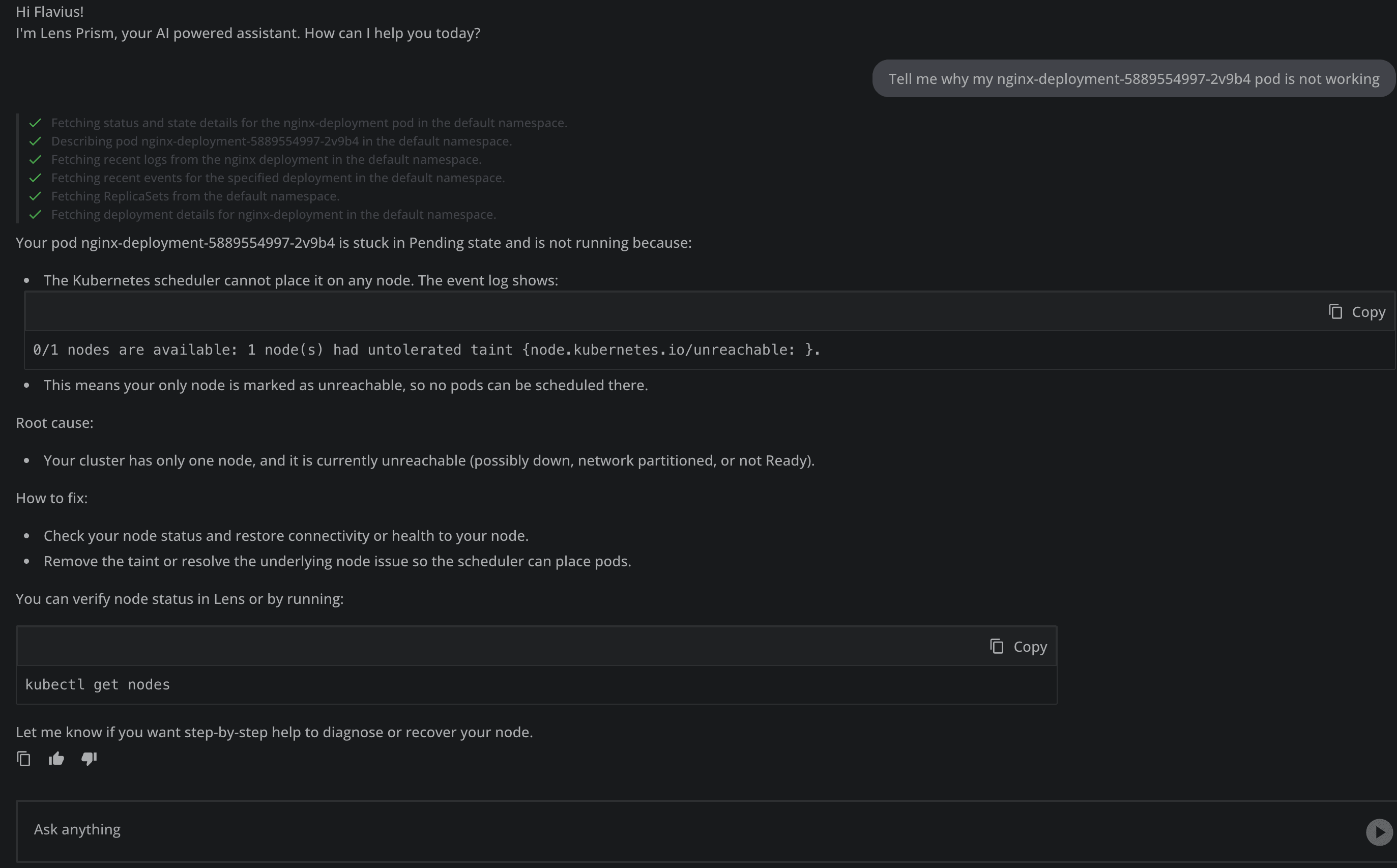

3. Lens Prism

Lens Prism is your AI copilot inside Lens Kubernetes IDE. If you are running multiple K8s clusters, switching contexts simply doesn’t scale. Because it is part of Lens Kubernetes IDE, you can take advantage of all the powerful features that Lens has to offer, such as one-click integrations with AWS EKS, Helm chart management, and the ability to attach to a pod, connect to its shell, and check its logs in real time.

With Lens Prism, you can ask plain-English questions like “what’s happening with my pods?”, and it will give you detailed insights into the issues it finds and how to fix them.

Lens Prism is cluster-aware, namespace-aware, and gives clear answers inside a clean UI. It lowers the barrier to entry, making your developers self-sufficient, and frees up your ops team from being a bottleneck.

4. Kubeflow

If you are building AI on top of Kubernetes, Kubeflow is here to help. It is an open-source MLOps platform that can be used for the entire machine learning lifecycle.

With Kubeflow you get:

- Managed notebooks for experimentation

- Pipelines for creating scalable and repeatable ML workflows

- Trainers for distributed model training

Kubeflow makes deploying and managing ML on Kubernetes simple and portable, so you can train your models on your laptop and then scale them out on a massive cloud cluster without changing your workflow.

5. KServe

KServe is often used alongside Kubeflow, as it helps you deploy trained models as production-grade APIs. It has become a standard for deploying and serving ML models on Kubernetes and is designed for high-performance, highly scalable use cases.

KServe handles the hard parts of serving, such as serverless autoscaling, canary rollouts for safe updates, and a standardized inference protocol across frameworks like TensorFlow, PyTorch, and Hugging Face.
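To show what a canary rollout and the standardized protocol look like in practice, here is a minimal sketch that builds a KServe InferenceService manifest and a request for the V1 inference protocol as plain Python dictionaries. The helper names are hypothetical, and the model name and storage URI in any usage are placeholders you would replace with your own. The manifest fields follow the `serving.kserve.io/v1beta1` API as I understand it, so check them against the KServe version you run.

```python
import json
from typing import Any, Dict, List, Optional


def build_inference_service(name: str,
                            model_format: str,
                            storage_uri: str,
                            canary_percent: Optional[int] = None) -> Dict[str, Any]:
    """Build an InferenceService manifest as a dictionary.

    When canary_percent is set, that share of traffic goes to the
    newest revision while the rest stays on the previous one.
    """
    predictor: Dict[str, Any] = {
        "model": {
            "modelFormat": {"name": model_format},
            "storageUri": storage_uri,
        }
    }
    if canary_percent is not None:
        if not 0 <= canary_percent <= 100:
            raise ValueError("canary_percent must be between 0 and 100")
        predictor["canaryTrafficPercent"] = canary_percent
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {"predictor": predictor},
    }


def predict_path(model_name: str) -> str:
    """URL path for a prediction call in the V1 inference protocol."""
    return f"/v1/models/{model_name}:predict"


def build_predict_request(instances: List[Any]) -> Dict[str, Any]:
    """Request body for the V1 inference protocol."""
    return {"instances": instances}


def to_json(manifest: Dict[str, Any]) -> str:
    """Serialize a manifest; kubectl accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

You could write the output of `to_json(build_inference_service(...))` to a file and apply it with kubectl, then raise `canary_percent` step by step as the new revision proves itself. The same request body shape works regardless of which framework trained the model, which is the point of a shared protocol.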

Which AI tool should you pick?

Picking your AI copilot depends entirely on your use case. For a unified command center and a better developer experience, Lens Prism is the top choice, as it empowers your entire team to manage multiple clusters safely and easily from one place.

If you are interested in command-line troubleshooting, K8sGPT is a great starting point, as it will speed up incident response for your cluster issues.

For building and serving ML models, Kubeflow and KServe work really well together, and if you are interested in cost and infrastructure optimization, CAST AI is your answer.

Most teams will combine several of these tools, using Lens Prism as their main interface, CAST AI to reduce costs, and Kubeflow and KServe to power their AI workloads.

AI in Kubernetes predictions

AI agents will become more capable in the near future and will be used more and more inside Kubernetes clusters. Today, agentic AI can be a liability in some cases, because of hallucinations and the way applications are built. As agents become more stable, and with the correct rules in place, they will be able to fully automate the repeatable tasks that no one wants to do.

Kagent, for example, is an open-source project that runs AI agents inside your Kubernetes clusters, handling different types of workflows and troubleshooting like a junior DevOps engineer.

Standards like the Model Context Protocol (MCP) are being explored to give AI agents live awareness of Kubernetes resources, so they don’t operate blindly but with the same kind of context you would have as an engineer.
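To give a feel for how an agent asks for that context, here is a rough sketch of an MCP tool call. MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method. The tool name `list_pods` and its arguments are purely hypothetical: what is actually available depends on the MCP server you connect to.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request that invokes an MCP tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call(1, "list_pods", {"namespace": "default"})
```

The server answers with the live state of the cluster, and the agent reasons over that response instead of guessing.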

While we are not 100% there, these things will be here faster than you can imagine.

Key points

Kubernetes isn’t getting simpler, but it is getting smarter. With these AI copilots you can stop firefighting and equip your teams with everything they need to focus on providing real value.

If you are just starting out with copilots, pick the tool that solves your biggest pain point, and then layer in the rest as your needs grow.

If you need a tool that helps you understand everything happening inside your Kubernetes clusters, with real-time analytics and diagnostics, Lens Kubernetes IDE and Lens Prism are here to help.

Download Lens Kubernetes IDE today to get the most out of your Kubernetes management.