How to Manage Kubernetes Deployments: A Practical Guide

Managing Kubernetes deployments is one of those tasks that sounds straightforward until you're actually doing it. You've got pods to manage, replicas to scale, updates to roll out, and when things go sideways, you need to fix them fast.

In this article, we'll walk you through the essential deployment management tasks you'll encounter.

What is a Kubernetes Deployment?

A Kubernetes deployment is basically your application's instruction manual. It tells Kubernetes what containers to run, how many copies you want, and how to handle updates. The beauty of deployments is that they're declarative: you tell Kubernetes what you want, and it figures out how to get there.

Deployments manage your pods through ReplicaSets, automatically handling the messy details of keeping your application running. If a pod crashes, Kubernetes spins up a new one. If you need to update your app, it gradually rolls out the new version. This automatic self-healing is one of the main reasons you might be using Kubernetes in the first place.

Creating and updating deployments

You can create deployments imperatively with kubectl commands, or declaratively using YAML manifests. In a manifest, you define your container image, replica count, resource limits, and update strategy. Then you apply it with kubectl and Kubernetes makes it happen.
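As a minimal sketch of what such a manifest might look like, the example below defines a Deployment with three replicas. The name `web`, the `app: web` label, the file name, and the `nginx:1.27` image are placeholders chosen for illustration, and the resource values are arbitrary starting points rather than recommendations.

```yaml
# deployment.yaml (hypothetical file name)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                      # placeholder name
  labels:
    app: web
spec:
  replicas: 3                    # how many pod copies to keep running
  selector:
    matchLabels:
      app: web                   # must match the pod template labels below
  strategy:
    type: RollingUpdate          # the default update strategy, stated explicitly
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27      # placeholder image
          ports:
            - containerPort: 80
          resources:
            requests:            # used by the scheduler to place the pod
              cpu: 100m
              memory: 128Mi
            limits:              # upper bound enforced at runtime
              cpu: 500m
              memory: 256Mi
# Declarative:  kubectl apply -f deployment.yaml
# Imperative:   kubectl create deployment web --image=nginx:1.27 --replicas=3
```

The imperative command in the last comment creates a similar Deployment, but without the resource settings and without a file you can review or commit.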

The real power comes from treating your deployment manifests like code. Keep them in version control, make changes through pull requests, and you've got a complete audit trail of what changed and when. This GitOps approach will prevent a lot of production headaches.

Scaling your applications

Scaling is probably the most common deployment task you'll do. If you need to handle more traffic, you bump up your replica count, and when traffic drops, you scale back down to save resources.

You can scale manually when you know traffic patterns ahead of time, or set up horizontal pod autoscaling to handle it automatically based on CPU, memory, or custom metrics. For most production workloads, autoscaling is the better choice, because it reacts faster than you can and doesn't need you to be awake at 3 AM.
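Both approaches could look roughly like the sketch below. It assumes a Deployment named `web` (a placeholder) that declares CPU requests, and a cluster with a metrics source such as metrics-server installed. The replica bounds and the 70% target are illustrative values, not tuned recommendations.

```yaml
# hpa.yaml (hypothetical file name)
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:                  # the workload this autoscaler controls
    apiVersion: apps/v1
    kind: Deployment
    name: web                      # placeholder Deployment name
  minReplicas: 3                   # never scale below this
  maxReplicas: 10                  # never scale above this
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' requested CPU
# Manual alternative: kubectl scale deployment/web --replicas=5
```

Once an autoscaler manages a Deployment, it is usually simpler to leave the replica count to it, since a manual `kubectl scale` will be overridden the next time the autoscaler reconciles.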

Updates and rollbacks

When you update an application, Kubernetes uses a rolling update strategy by default: it gradually replaces old pods with new ones while keeping your services available. If a release misbehaves, you can roll the deployment back to a previous revision. Several other update strategies are available, and you should pick the one that best fits your budget and use case.
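The pace of a rolling update can be tuned in the Deployment spec. The fragment below is a sketch with illustrative values, and `web` in the commented commands is a placeholder name. It is meant to be merged into a full manifest, not applied on its own.

```yaml
# Fragment of a Deployment manifest
spec:
  replicas: 4
  minReadySeconds: 10          # a new pod must stay ready this long to count as available
  revisionHistoryLimit: 5      # old ReplicaSets kept around for rollbacks
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1              # at most 1 extra pod above the desired count
      maxUnavailable: 0        # never drop below the desired count during the update
# Watch a rollout:      kubectl rollout status deployment/web
# List revisions:       kubectl rollout history deployment/web
# Roll back one step:   kubectl rollout undo deployment/web
```

With `maxUnavailable: 0`, the update is slower but capacity never dips. Raising it trades some capacity for a faster rollout.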

Monitoring deployment health

In Kubernetes, you have built-in status information that shows you replica counts, rollout progress, and any conditions that might require your attention. You should check your deployment health regularly to catch issues before they impact your users.

Watch key metrics like pod readiness, how often your pods are restarting, resource consumption, and whether rollouts are progressing according to plan. You can do all of these things manually, but integrating proper monitoring tools and alerting into your toolchain will give you even deeper visibility into what’s actually happening inside your cluster. Check out how to set up Prometheus and Grafana in Kubernetes.
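For reference, the built-in status of a healthy Deployment looks roughly like the excerpt below. The numbers are made up for illustration and `web` is a placeholder name. Real output contains more fields, such as timestamps and messages.

```yaml
# Illustrative excerpt of: kubectl get deployment web -o yaml
status:
  observedGeneration: 4
  replicas: 3                  # pods currently managed by the Deployment
  updatedReplicas: 3           # pods running the latest pod template
  readyReplicas: 3             # pods passing their readiness checks
  availableReplicas: 3         # pods ready for at least minReadySeconds
  conditions:
    - type: Available
      status: "True"
      reason: MinimumReplicasAvailable
    - type: Progressing
      status: "True"
      reason: NewReplicaSetAvailable   # the last rollout completed
# Restart counts:   kubectl get pods -l app=web    (RESTARTS column)
# Resource usage:   kubectl top pods -l app=web    (requires metrics-server)
```

A stalled rollout typically shows up as a `Progressing` condition with status `"False"` and reason `ProgressDeadlineExceeded`, which makes it a reasonable thing to alert on.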

Best practices for managing your Kubernetes deployments

There are several best practices that you should take into consideration when you are working with Kubernetes deployments:

- Always use declarative configuration: Manage your deployments with YAML manifests that are kept in version control and deployed using GitOps. Even though kubectl commands might feel faster, they are almost impossible to reproduce, and auditing turns into a nightmare.

- Set resource requests and limits: Requests ensure proper scheduling, while limits prevent your pods from taking down your cluster.

- Configure health checks: Implement liveness probes and readiness probes to ensure your applications are running properly

- Use meaningful labels: Labels are key to querying, filtering, and managing your resources

- Plan your update strategy: Depending on your resources and the nature of your applications, you can implement different deployment strategies. Critical services should take advantage of blue-green, canary, or even more sophisticated rollout strategies (e.g. using Argo Rollouts), while stateless non-critical applications can work with the default rolling updates

- Use ConfigMaps for non-sensitive configuration in your applications, and Secrets for passwords, API keys, and other sensitive data: This keeps your secrets and configuration details out of your container images, and your sensitive values can stay out of version control as well.

- Implement proper secret management: For production use cases, implement proper secret management solutions like HashiCorp Vault, OpenBao, or AWS Secrets Manager that offer encryption and access control
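Several of these practices can be combined in a single manifest. The sketch below is one possible shape, under stated assumptions: the image, the `/ready` and `/healthz` endpoints, the port, the `web-config` ConfigMap, and the `web-secrets` Secret are all hypothetical and would need to exist in your application and cluster.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    app.kubernetes.io/name: web
    app.kubernetes.io/part-of: shop            # hypothetical application group
    environment: production
spec:
  replicas: 3
  selector:
    matchLabels:
      app.kubernetes.io/name: web
  template:
    metadata:
      labels:
        app.kubernetes.io/name: web
        environment: production
    spec:
      containers:
        - name: web
          image: registry.example.com/web:1.4.2   # hypothetical image
          ports:
            - containerPort: 8080
          resources:
            requests: {cpu: 250m, memory: 256Mi}  # used for scheduling
            limits: {cpu: "1", memory: 512Mi}     # enforced at runtime
          readinessProbe:                         # gates traffic to the pod
            httpGet:
              path: /ready                        # hypothetical endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:                          # restarts the container on failure
            httpGet:
              path: /healthz                      # hypothetical endpoint
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
          envFrom:
            - configMapRef:
                name: web-config                  # hypothetical ConfigMap
          env:
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: web-secrets               # hypothetical Secret
                  key: db-password
# Query by label: kubectl get pods -l app.kubernetes.io/name=web,environment=production
```

The Secret is referenced by name only, so its value never appears in this file. How the Secret itself gets into the cluster is left to whichever secret management solution you use.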

When to use StatefulSets or DaemonSets instead

Deployments should be your go-to for stateless applications, but knowing when to use StatefulSets and DaemonSets will save you from fighting against the wrong tool.

If you are running databases, message queues, or anything that maintains state, you will need to use StatefulSets, as they provide stable network identities and persistent storage that survives pod rescheduling.
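A minimal StatefulSet sketch is shown below. It assumes a headless Service named `db` and a Secret named `db-secrets` already exist (both hypothetical), and it only demonstrates stable identity and per-pod storage. It does not configure database replication, so it runs a single replica.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  serviceName: db                  # headless Service (assumed to exist) that gives pods stable DNS names
  replicas: 1                      # pods are named db-0, db-1, ... in order
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
        - name: db
          image: postgres:16       # placeholder image
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-secrets # hypothetical Secret
                  key: password
            - name: PGDATA         # keep data in a subdirectory of the mounted volume
              value: /var/lib/postgresql/data/pgdata
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:            # one PersistentVolumeClaim per pod, e.g. data-db-0
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 10Gi          # illustrative size
```

If `db-0` is rescheduled to another node, it comes back with the same name and reattaches to the same claim, which is the behavior a Deployment does not give you.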

On the other hand, when you are running logging agents that collect logs from every node, monitoring agents like Prometheus node exporters, or anything else that needs to run on every node in your cluster, DaemonSets are the answer.
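A DaemonSet for a node-level agent could be sketched as follows. The image tag and the toleration are assumptions for illustration. Production node exporter setups usually also mount host paths and are often installed through a Helm chart or an operator instead of a hand-written manifest.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      tolerations:                               # also schedule onto control-plane nodes
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
      containers:
        - name: node-exporter
          image: prom/node-exporter:v1.8.2       # illustrative tag
          ports:
            - containerPort: 9100                # node exporter's default metrics port
          resources:
            requests: {cpu: 50m, memory: 32Mi}
            limits: {cpu: 200m, memory: 64Mi}
```

There is no `replicas` field: the controller runs one pod per eligible node and adds or removes pods as nodes join or leave the cluster.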

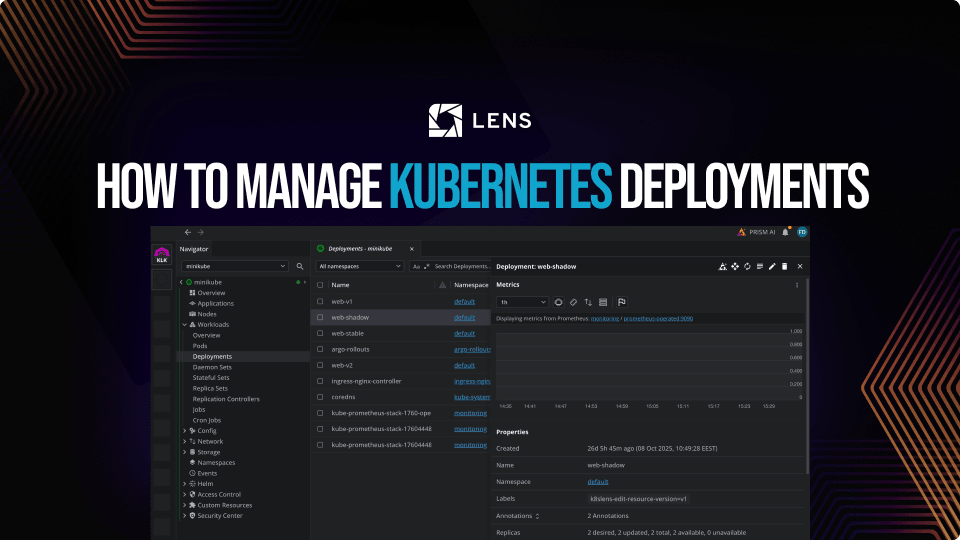

Managing deployments with Lens Kubernetes IDE

Even if you are using just a handful of Kubernetes clusters, managing deployments can quickly become time-consuming and error-prone if you use only plain kubectl.

With Lens Kubernetes IDE, you get a visual interface that shows you at a glance everything that is happening inside your deployments, and switching from one cluster to another is just a click away, rather than a series of kubectl commands.

Troubleshooting is even easier as you get pod details, logs, and configurations instantly, and if you want to enable even faster problem solving, you can leverage Lens Prism, the built-in AI assistant inside of Lens.

With Lens Kubernetes IDE, scaling is as simple as changing a number in the UI. There is no need to remember kubectl scale syntax or edit YAML files (but if you still want to edit them, Lens offers a built-in IDE for that). As soon as you adjust the replica count, you can watch your Kubernetes cluster respond in real time, as new pods are spun up quickly or surplus replicas terminate gracefully.

Restarting your deployments in Lens is just a click away. You’ll just need to trigger the restart and then monitor the rolling restart process to ensure everything transitions smoothly without any downtime.

Lens Kubernetes IDE goes beyond deployment operations: you get an integrated terminal with cluster context already configured, real-time metrics and monitoring, log streaming from multiple containers simultaneously, and built-in enterprise features such as teamwork, SSO, SCIM, and more.

Wrapping up

Effective Kubernetes deployment management comes down to understanding the fundamentals, implementing solid practices, and using the right tools. Master declarative configuration, scaling strategies, update mechanisms, and monitoring, and in the end, let good tooling amplify your productivity.

Always develop workflows that make deployment management efficient, repeatable, and reliable. Start with these fundamentals, layer in proven practices, and leverage tools that actually help you get work done.